Lehmler, Stephan Johann; Saif-ur-Rehman, Muhammad; Glasmachers, Tobias; Iossifidis, Ioannis. In: Neurocomputing, p. 128473, 2024, ISSN: 0925-2312. Keywords: Artificial neural networks, Generalization, Machine Learning, Memorization, Poisson process, Stochastic modeling

@article{lehmlerUnderstandingActivationPatterns2024,

title = {Understanding Activation Patterns in Artificial Neural Networks by Exploring Stochastic Processes: Discriminating Generalization from Memorization},

author = {Stephan Johann Lehmler and Muhammad Saif-ur-Rehman and Tobias Glasmachers and Ioannis Iossifidis},

publisher = {Elsevier},

url = {https://www.sciencedirect.com/science/article/pii/S092523122401244X},

doi = {10.1016/j.neucom.2024.128473},

issn = {0925-2312},

year = {2024},

date = {2024-09-19},

urldate = {2024-09-19},

journal = {Neurocomputing},

pages = {128473},

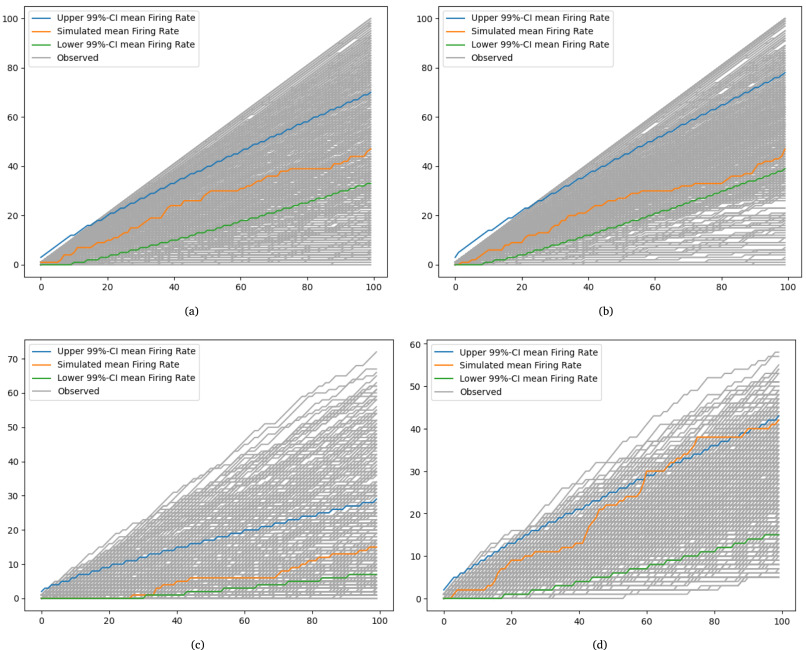

abstract = {To gain a deeper understanding of the behavior and learning dynamics of artificial neural networks, mathematical abstractions and models are valuable. They provide a simplified perspective and facilitate systematic investigations. In this paper, we propose to analyze dynamics of artificial neural activation using stochastic processes, which have not been utilized for this purpose thus far. Our approach involves modeling the activation patterns of nodes in artificial neural networks as stochastic processes. By focusing on the activation frequency, we can leverage techniques used in neuroscience to study neural spike trains. Specifically, we extract the activity of individual artificial neurons during a classification task and model their activation frequency. The underlying process model is an arrival process following a Poisson distribution. We examine the theoretical fit of the observed data generated by various artificial neural networks in image recognition tasks to the proposed model’s key assumptions. Through the stochastic process model, we derive measures describing activation patterns of each network. We analyze randomly initialized, generalizing, and memorizing networks, allowing us to identify consistent differences in learning methods across multiple architectures and training sets. We calculate features describing the distribution of Activation Rate and Fano Factor, which prove to be stable indicators of memorization during learning. These calculated features offer valuable insights into network behavior. The proposed model demonstrates promising results in describing activation patterns and could serve as a general framework for future investigations. It has potential applications in theoretical simulation studies as well as practical areas such as pruning or transfer learning.},

keywords = {Artificial neural networks, Generalization, Machine Learning, Memorization, Poisson process, Stochastic modeling},

pubstate = {published},

tppubtype = {article}

}
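The two per-neuron statistics named in the abstract, Activation Rate and Fano Factor, can be illustrated with a minimal sketch. This is not the authors' code. It assumes (hypothetically) that a unit "fires" on an input when its activation exceeds a threshold of 0, and that consecutive blocks of `window_size` inputs stand in for the time bins used with spike trains. The function name `activation_statistics`, the window size of 100 and the simulated activations are all illustrative choices.

```python
import random
from statistics import mean, variance


def activation_statistics(activations, window_size, threshold=0.0):
    """Return per-neuron (activation_rates, fano_factors) as two lists.

    Hypothetical setup: `activations` is a list of rows, one row per presented
    input and one column per neuron; consecutive blocks of `window_size` inputs
    play the role of time bins.
    """
    n_windows = len(activations) // window_size
    if n_windows < 2:
        raise ValueError("need at least two full windows to estimate a variance")
    n_neurons = len(activations[0])
    rates, fanos = [], []
    for j in range(n_neurons):
        # Count "firing events" (activation > threshold) of neuron j per window.
        counts = [
            sum(1 for row in activations[w * window_size:(w + 1) * window_size]
                if row[j] > threshold)
            for w in range(n_windows)
        ]
        m = mean(counts)
        rates.append(m / window_size)  # average number of firings per input
        # Fano factor: variance / mean of the counts (exactly 1 for a Poisson process).
        fanos.append(variance(counts) / m if m > 0 else float("nan"))
    return rates, fanos


# Usage with hypothetical ReLU-like activations: 10,000 inputs x 3 neurons.
random.seed(0)
acts = [[max(random.gauss(0.0, 1.0), 0.0) for _ in range(3)] for _ in range(10_000)]
rates, fanos = activation_statistics(acts, window_size=100)
```

In this simulated example each unit fires independently with probability p = 0.5 per input, so the window counts are binomial and the Fano factor should land near 1 − p rather than the Poisson value of 1. A systematic departure from 1 of this kind is one way to check how well a Poisson arrival model fits observed activations.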