Teaching area: Theoretical Computer Science and Artificial Intelligence

Office: 01.214

Lab: 04.105

Phone: +49 208 88254-806

Email:

Website: http://lab.iossifidis.net

Ioannis Iossifidis studied physics, specializing in theoretical particle physics, at the University of Dortmund. In 2006 he received his doctorate from the Faculty of Physics and Astronomy at Ruhr-Universität Bochum.

At the Institut für Neuroinformatik, Prof. Dr. Iossifidis led the Autonomous Robotics group. With his research group he took part in numerous artificial intelligence research projects funded by the BMBF and the EU. Since 1 October 2010 he has been working at HRW in the Institute of Computer Science, where he holds the chair of Theoretical Computer Science and Artificial Intelligence.

For more than 20 years, Prof. Dr. Ioannis Iossifidis has been developing biologically inspired, anthropomorphic, autonomous robot systems. These systems are both part and product of his research in computational neuroscience. In this context he developed models of information processing in the human brain and applied them to technical systems.

In recent years his scientific work has focused on three areas. The first is modeling human arm movements. The second is designing so-called "simulated realities" to simulate and evaluate interactions between humans, machines, and their environment. The third is developing cortical exoprosthetic components. A cross-cutting theme of his research is the theory and application of machine learning algorithms based on deep neural architectures.

Ioannis Iossifidis's research has been funded through several large projects. These include projects of the BMBF (NEUROS, MORPHA, LOKI, DESIRE, Bernstein Focus: Neural Basis of Learning, and others) and of the DFG ("Motor-parietal cortical neuroprosthesis with somatosensory feedback for restoring hand and arm functions in tetraplegic patients"). EU funding includes Neural Dynamics (EU STREP), EUCogII, and EUCogIII. His work was also among the winners of the 2019 lead-market competitions (Leitmarktwettbewerbe) Gesundheit.NRW and IKT.NRW.

AREAS OF WORK AND RESEARCH

- Computational Neuroscience

- Brain-Computer Interfaces

- Development of cortical exoprosthetic components

- Theory of neural networks

- Modeling of human arm movements

- Simulated reality

RESEARCH FACILITIES

- Lab with link

- ???

- ???

COURSES

- ???

- ???

- ???

PROJECTS

- Project with link

- ???

- ???

RESEARCH STAFF

Felix Grün

Office: 02.216 (Campus Bottrop)

Marie Schmidt

Office: 02.216 (Campus Bottrop)

Aline Xavier Fidencio

Visiting researcher

Muhammad Ayaz Hussain

Doctoral researcher

Tim Sziburis

Doctoral researcher

Farhad Rahmat

Student assistant

GOOGLE SCHOLAR PROFILE

Articles

Fidêncio, Aline Xavier; Klaes, Christian; Iossifidis, Ioannis

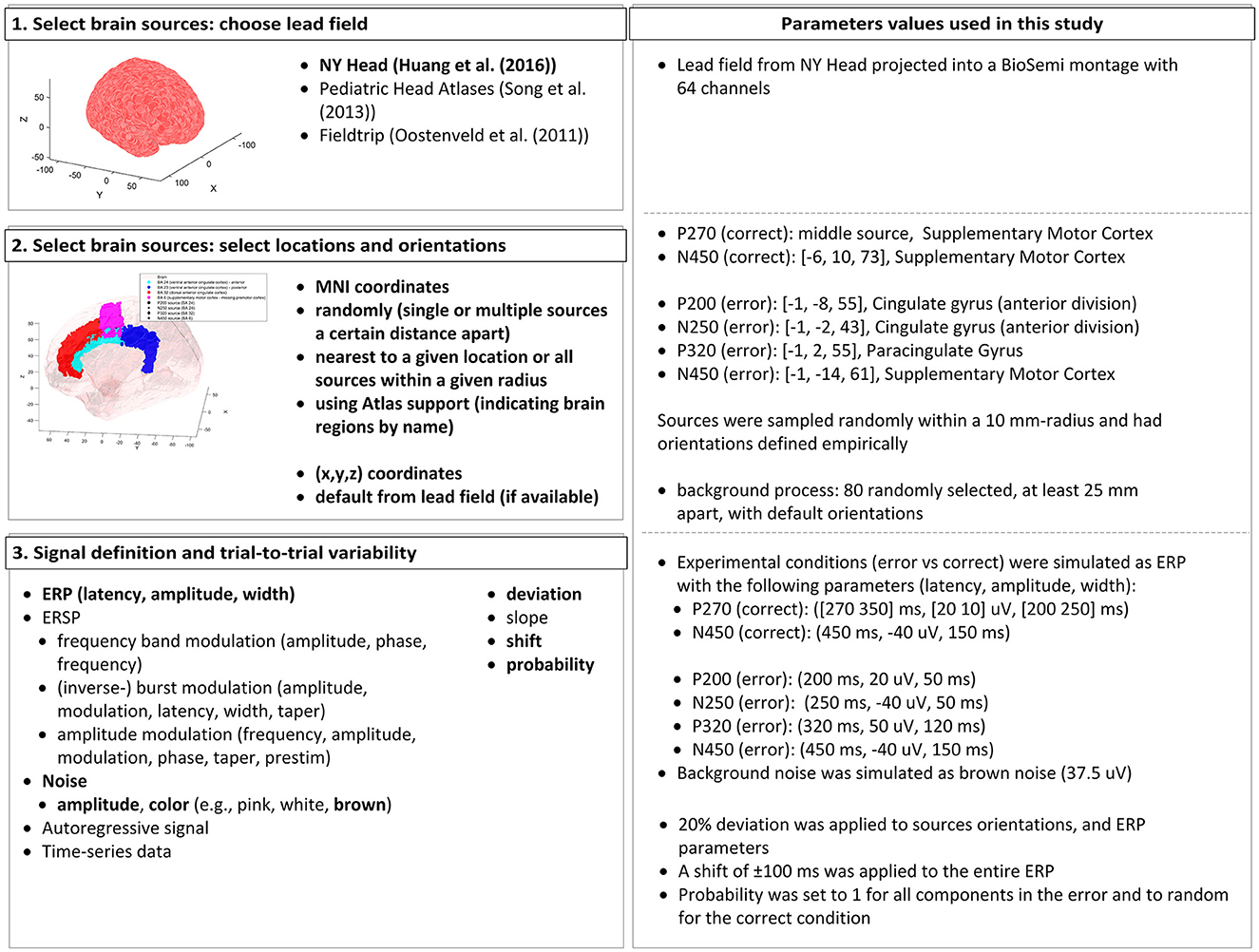

A Generic Error-Related Potential Classifier Based on Simulated Subjects Article

In: Frontiers in Human Neuroscience, vol. 18, art. 1390714, 2024, ISSN: 1662-5161.

Keywords: adaptive brain-machine (computer) interface, BCI, EEG, Error-related potential (ErrP), ErrP classifier, Generic decoder, Machine Learning, SEREEGA, Simulation

@article{xavierfidencioGenericErrorrelatedPotential2024,

title = {A Generic Error-Related Potential Classifier Based on Simulated Subjects},

author = {Aline Xavier Fidêncio and Christian Klaes and Ioannis Iossifidis},

editor = {Frontiers Media SA},

url = {https://www.frontiersin.org/journals/human-neuroscience/articles/10.3389/fnhum.2024.1390714/full},

doi = {10.3389/fnhum.2024.1390714},

issn = {1662-5161},

year = {2024},

date = {2024-07-19},

urldate = {2024-07-19},

journal = {Frontiers in Human Neuroscience},

volume = {18},

pages = {1390714},

publisher = {Frontiers},

abstract = {Error-related potentials (ErrPs) are brain signals known to be generated as a reaction to erroneous events. Several works have shown that not only self-made errors but also mistakes generated by external agents can elicit such event-related potentials. The possibility of reliably measuring ErrPs through non-invasive techniques has increased the interest in the brain-computer interface (BCI) community in using such signals to improve performance, for example, by performing error correction. Extensive calibration sessions are typically necessary to gather sufficient trials for training subject-specific ErrP classifiers. This procedure is not only time-consuming but also tiresome for participants. In this paper, we explore the effectiveness of ErrPs in closed-loop systems, emphasizing their dependency on precise single-trial classification. To guarantee the presence of an ErrPs signal in the data we employ and to ensure that the parameters defining ErrPs are systematically varied, we utilize the open-source toolbox SEREEGA for data simulation. We generated training instances and evaluated the performance of the generic classifier on both simulated and real-world datasets, proposing a promising alternative to conventional calibration techniques. Results show that a generic support vector machine classifier reaches balanced accuracies of 72.9%, 62.7%, 71.0%, and 70.8% on each validation dataset. While performing similarly to a leave-one-subject-out approach for error class detection, the proposed classifier shows promising generalization across different datasets and subjects without further adaptation. Moreover, by utilizing SEREEGA, we can systematically adjust parameters to accommodate the variability in the ErrP, facilitating the systematic validation of closed-loop setups. 
Furthermore, our objective is to develop a universal ErrP classifier that captures the signal's variability, enabling it to determine the presence or absence of an ErrP in real EEG data.},

keywords = {adaptive brain-machine (computer) interface, BCI, EEG, Error-related potential (ErrP), ErrP classifier, Generic decoder, Machine Learning, SEREEGA, Simulation},

pubstate = {published},

tppubtype = {article}

}

Conferences

Noth, S; Iossifidis, Ioannis

Benefits of ego motion feedback for interactive experiments in virtual reality scenarios Conference

BC11: Computational Neuroscience & Neurotechnology Bernstein Conference & Neurex Annual Meeting 2011, 2011.

Keywords: Machine Learning, Robotics, simulated reality, Simulation, virtual reality

@conference{Noth2011a,

title = {Benefits of ego motion feedback for interactive experiments in virtual reality scenarios},

author = {S Noth and Ioannis Iossifidis},

year = {2011},

date = {2011-01-01},

urldate = {2011-01-01},

booktitle = {BC11: Computational Neuroscience \& Neurotechnology Bernstein Conference \& Neurex Annual Meeting 2011},

keywords = {Machine Learning, Robotics, simulated reality, Simulation, virtual reality},

pubstate = {published},

tppubtype = {conference}

}

Proceedings Articles

Noth, Sebastian; Edelbrunner, Johann; Iossifidis, Ioannis

A Versatile Simulated Reality Framework: From Embedded Components to ADAS Proceedings Article

In: International Conference on Pervasive and Embedded Computing and Communication Systems (PECCS 2012), 2012.

Keywords: Machine Learning, Robotics, simulated reality, Simulation, virtual reality

@inproceedings{Noth2012b,

title = {A Versatile Simulated Reality Framework: From Embedded Components to ADAS},

author = {Sebastian Noth and Johann Edelbrunner and Ioannis Iossifidis},

year = {2012},

date = {2012-01-01},

booktitle = {International Conference on Pervasive and Embedded Computing and Communication Systems (PECCS 2012)},

keywords = {Machine Learning, Robotics, simulated reality, Simulation, virtual reality},

pubstate = {published},

tppubtype = {inproceedings}

}

Noth, Sebastian; Iossifidis, Ioannis

Simulated reality environment for development and assessment of cognitive robotic systems Proceedings Article

In: Proc. IEEE/RSJ International Conference on Robotics and Biomimetics (RoBio2011), 2011.

Keywords: Machine Learning, Robotics, Simulation, virtual reality

@inproceedings{Noth2011,

title = {Simulated reality environment for development and assessment of cognitive robotic systems},

author = {Sebastian Noth and Ioannis Iossifidis},

year = {2011},

date = {2011-01-01},

urldate = {2011-01-01},

booktitle = {Proc. IEEE/RSJ International Conference on Robotics and Biomimetics (RoBio2011)},

abstract = {Simulated reality environment incorporating humans and physically plausible behaving robots, providing natural interaction channels, with the option to link simulator to real perception and motion, is gaining importance for the development of cognitive, intuitive interacting and collaborating robotic systems.

In the present work we introduce a head tracking system which is utilized to incorporate human ego motion in simulated environment improving immersion in the context of human-robot collaborative tasks.},

keywords = {Machine Learning, Robotics, Simulation, virtual reality},

pubstate = {published},

tppubtype = {inproceedings}

}


Noth, Sebastian; Schrowangen, Eva; Iossifidis, Ioannis

Using ego motion feedback to improve the immersion in virtual reality environments Proceedings Article

In: ISR / ROBOTIK 2010, Munich, Germany, 2010.

Keywords: Autonomous Robotics, head tracking, Simulation, virtual reality

@inproceedings{Noth2010,

title = {Using ego motion feedback to improve the immersion in virtual reality environments},

author = {Sebastian Noth and Eva Schrowangen and Ioannis Iossifidis},

year = {2010},

date = {2010-01-01},

booktitle = {ISR / ROBOTIK 2010},

address = {Munich, Germany},

abstract = {To study driver behavior we set up a lab with fixed base driving simulators. In order to compensate for the lack of physical feedback in this scenario, we aimed for another means of increasing the realism of our system. In the following, we propose an efficient method of head tracking and its integration in our driving simulation. Furthermore, we illuminate why this is a promising boost of the subjects' immersion in the virtual world. Our idea for increasing the feeling of immersion is to give the subject feedback on head movements relative to the screen. A real driver sometimes moves his head in order to see something better or to look behind an occluding object. In addition to these intentional movements, a study conducted by Zikovitz and Harris has revealed that drivers involuntarily tilt their heads when they go around corners in order to maximize the use of visual information available in the scene. Our system reflects the visual changes of any head movement and hence gives feedback on both involuntary and intentional motion. If, for example, subjects move to the left, they will see more from the right-hand side of the scene. If, on the other hand, they move upwards, a larger fraction of the engine hood will be visible. The same holds for the rear view mirror.},

keywords = {Autonomous Robotics, head tracking, Simulation, virtual reality},

pubstate = {published},

tppubtype = {inproceedings}

}